Building Microservice-Ready Monoliths: Lessons from a Workflow Engine

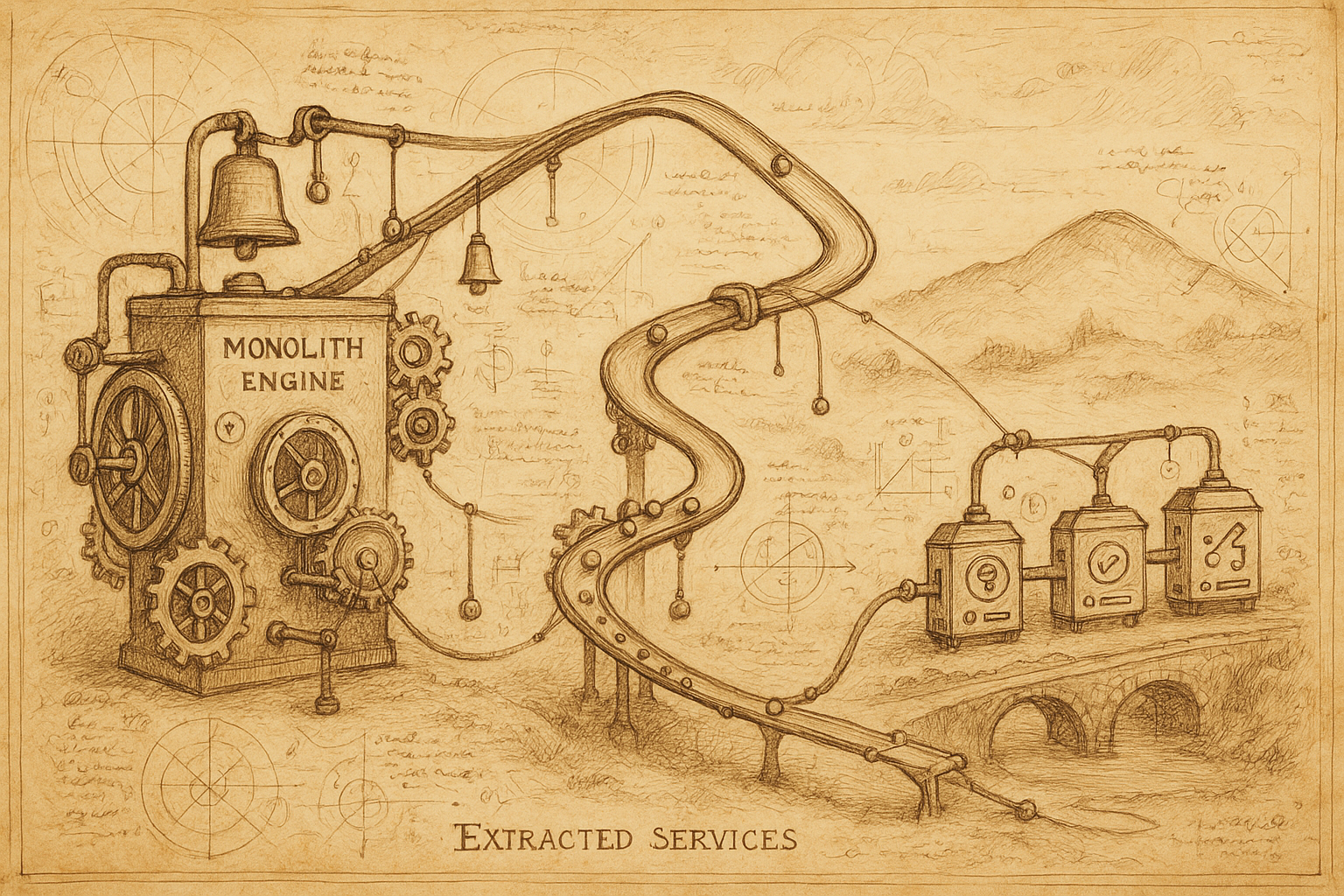

How we designed a monolithic workflow engine that could later be split into microservices without rewriting its business logic, by building service boundaries in from day one.

There's this moment in every startup's life when you're staring at a 2800-line function that provisions Kubernetes clusters, and you realize you've got a problem. Not just a "this is hard to test" problem, but a "how do we ever scale this team" problem.

We saw two obvious options: rewrite everything as microservices immediately, or find a way to build something that could evolve gracefully. We chose a third that I haven't seen discussed much: building what I call "microservice-ready monoliths."

The 2800-Line Monster

Our cluster provisioning logic had grown organically. What started as a simple "create cluster" endpoint had absorbed user validation, resource checking, Hetzner API calls, DNS configuration, monitoring setup, and error handling. Classic startup code evolution.

```go
func CreateCluster(ctx context.Context, req *CreateClusterRequest) (*Cluster, error) {
	// Validate user permissions (200 lines)
	// Check resource quotas (300 lines)
	// Call Hetzner API (400 lines)
	// Configure DNS (250 lines)
	// Setup monitoring (350 lines)
	// Handle rollback scenarios (500 lines)
	// Update database state (800 lines)
	// ... you get the idea
}
```

The function worked, but it was impossible to test individual pieces, and every change required understanding the entire flow. We needed to break it apart without stopping feature development.

The Microservice-Ready Approach

Instead of immediately extracting services, we refactored the monolith as if each component were already a separate service. The key insight: if you design the internal boundaries correctly, extracting a service later requires no changes to the business logic, only to how the component is wired in.

Here's what we built:

```go
type WorkflowEngine struct {
	userValidator      UserValidator
	resourceChecker    ResourceChecker
	clusterProvisioner ClusterProvisioner
	dnsConfigurator    DNSConfigurator
	eventPublisher     EventPublisher
}

func (w *WorkflowEngine) ExecuteWorkflow(ctx context.Context, workflow *Workflow) error {
	for _, step := range workflow.Steps {
		if err := w.executeStep(ctx, step); err != nil {
			return w.handleStepFailure(ctx, step, err)
		}

		w.eventPublisher.Publish(ctx, StepCompleted{StepID: step.ID})
	}

	return nil
}
```
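The `executeStep` method is not shown here. As a rough sketch of how step routing could work, assuming each step carries a `Type` string and that handlers are registered in a map, something like the following would do. `Engine`, `StepHandler`, the `handlers` field, and the simulated failure are illustrative names and behavior, not the actual implementation:

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// Step is a simplified stand-in for the workflow step type (hypothetical fields).
type Step struct {
	ID   string
	Type string
}

// StepHandler is a hypothetical function type: one handler per step type.
type StepHandler func(ctx context.Context, step Step) error

// Engine is a cut-down engine that only knows how to dispatch steps.
type Engine struct {
	handlers map[string]StepHandler
}

// executeStep looks up the handler registered for the step's type and runs it.
func (e *Engine) executeStep(ctx context.Context, step Step) error {
	handler, ok := e.handlers[step.Type]
	if !ok {
		return fmt.Errorf("no handler registered for step type %q", step.Type)
	}
	return handler(ctx, step)
}

// run executes the steps in order and returns the IDs of the steps that
// completed before the first failure, plus that failure (if any).
func run(steps []Step) ([]string, error) {
	e := &Engine{handlers: map[string]StepHandler{
		"validate_user": func(ctx context.Context, s Step) error { return nil },
		"create_cluster": func(ctx context.Context, s Step) error {
			return errors.New("provider unavailable") // simulated failure
		},
	}}

	var completed []string
	for _, s := range steps {
		if err := e.executeStep(context.Background(), s); err != nil {
			return completed, fmt.Errorf("step %s failed: %w", s.ID, err)
		}
		completed = append(completed, s.ID)
	}
	return completed, nil
}

func main() {
	done, err := run([]Step{
		{ID: "1", Type: "validate_user"},
		{ID: "2", Type: "create_cluster"},
	})
	fmt.Println(done, err)
}
```

Because the engine only sees handlers keyed by type, a handler can later delegate to a remote service without the dispatch loop noticing.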

Service Boundaries Within the Monolith

Each component was designed with clear interfaces and responsibilities:

```go
// UserValidator - handles all user permission logic
type UserValidator interface {
	ValidateClusterCreation(ctx context.Context, userID string, req ClusterRequest) error
	CheckQuotas(ctx context.Context, userID string) (*QuotaStatus, error)
}

// ClusterProvisioner - handles cloud provider interactions
type ClusterProvisioner interface {
	CreateCluster(ctx context.Context, spec ClusterSpec) (*Cluster, error)
	DeleteCluster(ctx context.Context, clusterID string) error
	GetClusterStatus(ctx context.Context, clusterID string) (*ClusterStatus, error)
}

// DNSConfigurator - handles DNS and networking
type DNSConfigurator interface {
	ConfigureDNS(ctx context.Context, cluster *Cluster) error
	SetupLoadBalancer(ctx context.Context, cluster *Cluster) error
}
```

Notice how each interface represents what would eventually become a separate microservice. But initially, they all lived in the same binary.

Event-Driven Communication

Instead of direct function calls between services, we used events for coordination:

```go
type Event interface {
	Type() string
	Payload() map[string]interface{}
}

type StepCompleted struct {
	StepID     string
	WorkflowID string
	Output     map[string]interface{}
}

func (s StepCompleted) Type() string { return "step.completed" }
func (s StepCompleted) Payload() map[string]interface{} {
	return map[string]interface{}{
		"step_id":     s.StepID,
		"workflow_id": s.WorkflowID,
		"output":      s.Output,
	}
}
```

This event-driven approach meant that when we later extracted services, they could communicate via message queues without changing the application logic.
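To make that concrete, here is a minimal sketch of an in-process publisher. It assumes an `EventPublisher` interface with a single `Publish(ctx, Event) error` method; the article never shows that interface, so its shape, along with `InMemoryBus` and `Subscribe`, is an assumption made for illustration. A queue-backed publisher would satisfy the same interface, which is what keeps callers unchanged:

```go
package main

import (
	"context"
	"fmt"
	"sync"
)

type Event interface {
	Type() string
	Payload() map[string]interface{}
}

// EventPublisher is an assumed shape; the real interface may differ.
type EventPublisher interface {
	Publish(ctx context.Context, e Event) error
}

// InMemoryBus (hypothetical) delivers events synchronously to subscribers
// living in the same process.
type InMemoryBus struct {
	mu       sync.RWMutex
	handlers map[string][]func(context.Context, Event)
}

func NewInMemoryBus() *InMemoryBus {
	return &InMemoryBus{handlers: make(map[string][]func(context.Context, Event))}
}

// Subscribe registers a handler for one event type.
func (b *InMemoryBus) Subscribe(eventType string, h func(context.Context, Event)) {
	b.mu.Lock()
	defer b.mu.Unlock()
	b.handlers[eventType] = append(b.handlers[eventType], h)
}

// Publish calls every handler registered for the event's type, in order.
func (b *InMemoryBus) Publish(ctx context.Context, e Event) error {
	b.mu.RLock()
	handlers := b.handlers[e.Type()]
	b.mu.RUnlock()
	for _, h := range handlers {
		h(ctx, e)
	}
	return nil
}

// Compile-time check that the bus satisfies the publisher interface.
var _ EventPublisher = (*InMemoryBus)(nil)

type StepCompleted struct{ StepID, WorkflowID string }

func (s StepCompleted) Type() string { return "step.completed" }
func (s StepCompleted) Payload() map[string]interface{} {
	return map[string]interface{}{"step_id": s.StepID, "workflow_id": s.WorkflowID}
}

// run publishes two events and returns the step IDs the subscriber saw.
func run() []string {
	bus := NewInMemoryBus()
	var seen []string
	bus.Subscribe("step.completed", func(_ context.Context, e Event) {
		seen = append(seen, e.Payload()["step_id"].(string))
	})

	var pub EventPublisher = bus // callers depend only on the interface
	_ = pub.Publish(context.Background(), StepCompleted{StepID: "s1", WorkflowID: "w1"})
	_ = pub.Publish(context.Background(), StepCompleted{StepID: "s2", WorkflowID: "w1"})
	return seen
}

func main() { fmt.Println(run()) }
```

The publishing side never learns whether delivery happened in memory or over a broker; only the value assigned to `pub` would change.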

Testing Becomes Trivial

With clear service boundaries, unit testing became straightforward:

```go
func TestWorkflowExecution(t *testing.T) {
	mockValidator := &MockUserValidator{}
	mockProvisioner := &MockClusterProvisioner{}
	eventBus := &MockEventBus{}

	// Wire the mocks into the same fields the production engine uses.
	engine := &WorkflowEngine{
		userValidator:      mockValidator,
		clusterProvisioner: mockProvisioner,
		eventPublisher:     eventBus,
	}

	// clusterSpec is a test fixture assumed to be defined elsewhere in the package.
	workflow := &Workflow{
		Steps: []Step{
			{Type: "validate_user", UserID: "user123"},
			{Type: "create_cluster", Spec: clusterSpec},
		},
	}

	err := engine.ExecuteWorkflow(context.Background(), workflow)
	assert.NoError(t, err)
	// One StepCompleted event is published per successful step.
	assert.Len(t, eventBus.PublishedEvents, 2)
}
```

Each component could be mocked independently, making tests fast and reliable.
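The mocks themselves can be tiny. As a hedged sketch, a hand-written `MockUserValidator` might look like the following; the interface is trimmed to one method for brevity, and the `Err` and `Calls` fields, `createCluster`, and `ErrForbidden` are hypothetical names introduced for this example:

```go
package main

import (
	"context"
	"errors"
	"fmt"
)

// ClusterRequest is a simplified stand-in for the real request type.
type ClusterRequest struct{ Name string }

// UserValidator is trimmed to a single method for this sketch.
type UserValidator interface {
	ValidateClusterCreation(ctx context.Context, userID string, req ClusterRequest) error
}

// MockUserValidator is a hand-written test double: it records the user IDs
// it was called with and returns whatever error the test configured.
type MockUserValidator struct {
	Err   error
	Calls []string
}

func (m *MockUserValidator) ValidateClusterCreation(_ context.Context, userID string, _ ClusterRequest) error {
	m.Calls = append(m.Calls, userID)
	return m.Err
}

// ErrForbidden is a hypothetical sentinel error used only in this example.
var ErrForbidden = errors.New("forbidden")

// createCluster stands in for code under test that depends on the interface.
func createCluster(ctx context.Context, v UserValidator, userID string, req ClusterRequest) error {
	if err := v.ValidateClusterCreation(ctx, userID, req); err != nil {
		return fmt.Errorf("validation failed: %w", err)
	}
	return nil
}

// run exercises the failure path and reports how many times the mock was
// called and whether the configured error was propagated to the caller.
func run() (calls int, denied bool) {
	mock := &MockUserValidator{Err: ErrForbidden}
	err := createCluster(context.Background(), mock, "user123", ClusterRequest{Name: "demo"})
	return len(mock.Calls), errors.Is(err, ErrForbidden)
}

func main() { fmt.Println(run()) }
```

No mocking framework is needed here: because the dependency is an interface, a struct with a couple of fields is enough to drive both the success and the failure path.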

The Extraction Moment

Six months later, as our team grew and we needed better scalability, extracting the first microservice was surprisingly simple. The user validation service became its own binary with minimal changes:

- Wrap the existing UserValidator with an HTTP API

- Update the workflow engine to call the HTTP endpoint instead of the local implementation

- Deploy and monitor

The interface didn't change. The business logic didn't change. We just changed the implementation from in-process to over-the-network.
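The first two steps above can be sketched roughly as follows. This is an illustration under stated assumptions rather than the code we shipped: the `/validate` path, the JSON wire format (`validateBody`), the status codes, and the `HTTPUserValidator` and `localValidator` types are all hypothetical, and the interface is trimmed to one method:

```go
package main

import (
	"bytes"
	"context"
	"encoding/json"
	"errors"
	"fmt"
	"net/http"
	"net/http/httptest"
)

type ClusterRequest struct {
	Name string `json:"name"`
}

type UserValidator interface {
	ValidateClusterCreation(ctx context.Context, userID string, req ClusterRequest) error
}

// localValidator stands in for the existing in-process implementation.
type localValidator struct{}

func (localValidator) ValidateClusterCreation(_ context.Context, userID string, _ ClusterRequest) error {
	if userID == "" {
		return errors.New("missing user ID")
	}
	return nil
}

// validateBody is a hypothetical wire format for the request.
type validateBody struct {
	UserID  string         `json:"user_id"`
	Request ClusterRequest `json:"request"`
}

// NewHandler exposes any UserValidator over HTTP (step 1).
func NewHandler(v UserValidator) http.Handler {
	return http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		var body validateBody
		if err := json.NewDecoder(r.Body).Decode(&body); err != nil {
			http.Error(w, err.Error(), http.StatusBadRequest)
			return
		}
		if err := v.ValidateClusterCreation(r.Context(), body.UserID, body.Request); err != nil {
			http.Error(w, err.Error(), http.StatusForbidden)
			return
		}
		w.WriteHeader(http.StatusNoContent)
	})
}

// HTTPUserValidator satisfies the same interface by calling the remote
// service instead of running the logic in-process (step 2).
type HTTPUserValidator struct {
	BaseURL string
	Client  *http.Client
}

func (h *HTTPUserValidator) ValidateClusterCreation(ctx context.Context, userID string, req ClusterRequest) error {
	payload, err := json.Marshal(validateBody{UserID: userID, Request: req})
	if err != nil {
		return err
	}
	httpReq, err := http.NewRequestWithContext(ctx, http.MethodPost, h.BaseURL+"/validate", bytes.NewReader(payload))
	if err != nil {
		return err
	}
	httpReq.Header.Set("Content-Type", "application/json")
	resp, err := h.Client.Do(httpReq)
	if err != nil {
		return err
	}
	defer resp.Body.Close()
	if resp.StatusCode != http.StatusNoContent {
		return fmt.Errorf("validation rejected: status %d", resp.StatusCode)
	}
	return nil
}

// run starts a throwaway test server and validates one good and one bad user.
func run() (okErr, emptyErr error) {
	srv := httptest.NewServer(NewHandler(localValidator{}))
	defer srv.Close()

	var v UserValidator = &HTTPUserValidator{BaseURL: srv.URL, Client: srv.Client()}
	okErr = v.ValidateClusterCreation(context.Background(), "user123", ClusterRequest{Name: "demo"})
	emptyErr = v.ValidateClusterCreation(context.Background(), "", ClusterRequest{Name: "demo"})
	return okErr, emptyErr
}

func main() { fmt.Println(run()) }
```

The workflow engine keeps holding a `UserValidator`; only the value assigned to that field changes. A real client would also want timeouts, retries, and richer error mapping, which this sketch leaves out.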

Lessons Learned

Building microservice-ready monoliths taught us several valuable lessons:

- Design for eventual extraction - Think about service boundaries from day one, even if you're building a monolith

- Interfaces are your friend - Clear interfaces make testing easier and service extraction trivial

- Events enable loose coupling - Event-driven architecture works within monoliths too

- Gradual evolution beats big rewrites - Extract services when you need them, not because microservices are trendy

- Monoliths can be well-architected - The deployment model doesn't determine code quality

When to Extract

We developed clear criteria for when to extract a service:

- Team boundaries - When different teams need to own different components

- Scaling requirements - When parts of the system need different scaling characteristics

- Technology needs - When a component would benefit from a different tech stack

- Deployment frequency - When a component needs to be deployed independently

Notice that none of these are technical debt or code organization issues. Those problems can be solved within a monolith.

The Result

Two years later, we've extracted four services from our original monolith. Each extraction was smooth, with no business logic changes required. The remaining monolith handles the core workflow orchestration, while specialized services handle user management, cluster provisioning, DNS, and monitoring.

Most importantly, we never had a "microservices rewrite" project. We evolved our architecture gradually, extracting services only when we had clear business reasons to do so.

Sometimes the best architecture decision is building something that can become what you need it to be, rather than trying to predict the future perfectly.